source ~/.bashrc

df -h

uname -m && cat /etc/*release

gcc --version

sudo apt install git

conda create --name lf python==3.10

conda activate lf

conda install pytorch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 pytorch-cuda=12.1 -c pytorch -c nvidia

python -c 'import torch;print(torch.__version__)'

python -c 'import torch;print(torch.cuda.is_available())'

cd LLaMA-Factory/

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install -e ".[metrics]"

For multi-GPU training: pip3 install deepspeed==0.14.0

Single-GPU launch: CUDA_VISIBLE_DEVICES=0 python src/webui.py

Optional, to keep it running in the background: CUDA_VISIBLE_DEVICES=0 nohup python src/webui.py &

Multi-GPU launch: CUDA_VISIBLE_DEVICES=0,1 python src/webui.py
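
The optional nohup launch can also capture the web UI's output and record its process ID so it can be stopped later. The following is a minimal sketch under stated assumptions: `run_detached`, `webui.log`, and `webui.pid` are hypothetical names chosen for illustration and are not part of LLaMA-Factory.

```shell
# Sketch: start a command detached from the terminal and keep its output.
# run_detached is a hypothetical helper, not part of LLaMA-Factory.
run_detached() {
    log_file="$1"
    shift
    # nohup makes the process ignore the hangup signal sent at logout;
    # stdout and stderr both go to the log file.
    nohup "$@" > "$log_file" 2>&1 &
    # $! is the PID of the job just started in the background.
    echo "$!"
}

# Usage (env sets the GPU list for the child process only):
# run_detached webui.log env CUDA_VISIBLE_DEVICES=0 python src/webui.py > webui.pid
# tail -f webui.log            # follow the output
# kill "$(cat webui.pid)"      # stop the web UI
```

Note that the environment variable goes before `nohup` (or is passed through `env`, as here); placing it after `nohup` makes nohup try to execute the assignment as a program.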

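| Argument | Description |
| --- | --- |
| version | Show version information |
| train | Train from the command line |
| chat | Chat inference from the command line |
| export | Merge and export the model |
| api | Start the API server for programmatic access |
| eval | Evaluate on standard datasets such as MMLU |
| webchat | Browser-based, inference-only chat page |
| webui | Launch the LlamaBoard front-end page, including visual |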

llamafactory-cli train \

–stage sft \

–do_train True \

–model_name_or_path /root/model_path \

–preprocessing_num_workers 16 \

–finetuning_type lora \

–template qwen \

–flash_attn auto \

–dataset_dir data \

–dataset name \

–cutoff_len 4096 \

–learning_rate 5e-05 \

–num_train_epochs 100.0 \

–max_samples 100000 \

–per_device_train_batch_size 4 \

–gradient_accumulation_steps 8 \

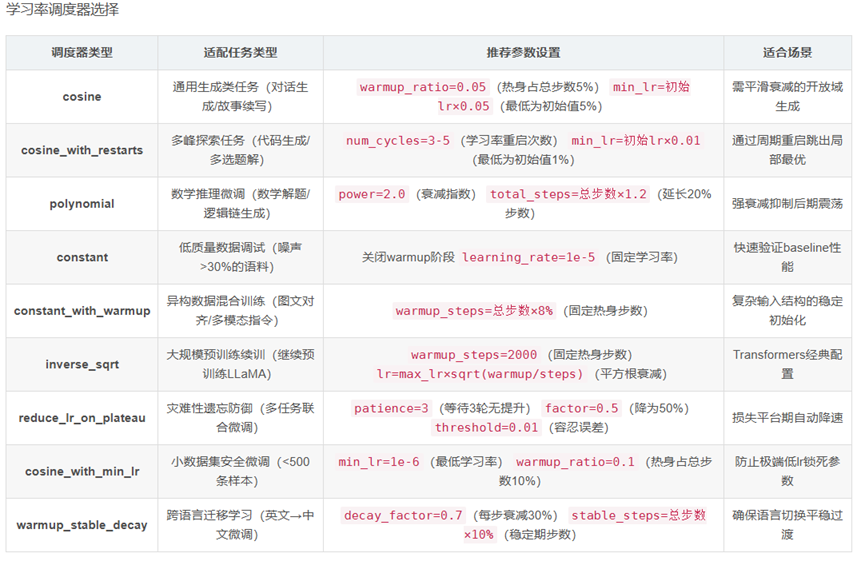

–lr_scheduler_type cosine \

–max_grad_norm 1.0 \

–logging_steps 5 \

–save_steps 100 \

–warmup_steps 0 \

–packing False \

–report_to none \

–output_dir saves_path \

–fp16 True \

–plot_loss True \

–trust_remote_code True \

–ddp_timeout 180000000 \

–include_num_input_tokens_seen True \

–optim adamw_torch \

–lora_rank 8 \

–lora_alpha 16 \

–lora_dropout 0 \

–lora_target all \

llamafactory-cli train examples/train_lora/qwen_lora_sft.yaml
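
The command above passes a YAML file instead of individual flags. A minimal sketch of writing such a file follows, assuming the YAML keys use the same names as the CLI flags (which is how the example configs shipped with LLaMA-Factory appear to be laid out). `my_lora_sft.yaml` is a hypothetical file name, and the values are copied from the flag-based training command; only a subset of the flags is shown.

```shell
# Write a training config whose keys mirror the CLI flags.
# my_lora_sft.yaml is a hypothetical file name.
# The learning rate is written as 5.0e-5 (with a decimal point) because
# some YAML parsers read "5e-05" as a string rather than a float.
cat > my_lora_sft.yaml <<'EOF'
stage: sft
do_train: true
model_name_or_path: /root/model_path
finetuning_type: lora
template: qwen
dataset_dir: data
dataset: name
cutoff_len: 4096
learning_rate: 5.0e-5
num_train_epochs: 100.0
per_device_train_batch_size: 4
gradient_accumulation_steps: 8
lr_scheduler_type: cosine
output_dir: saves_path
fp16: true
lora_rank: 8
lora_alpha: 16
lora_target: all
EOF

# Then train from the file:
# llamafactory-cli train my_lora_sft.yaml
```

Keeping the settings in a file makes a run easier to repeat and to compare against earlier runs than a long one-off command line.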

Save and export the full model
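
The notes give no command for this step. One possible sketch uses the `export` subcommand from the table to merge a LoRA adapter into its base model. The flag names are taken from my understanding of the project's merge examples and should be checked against the installed version; `BASE_MODEL` and `ADAPTER_DIR` reuse the placeholder paths from the training command, and `merged_model` is a hypothetical output directory.

```shell
# Sketch: merge a LoRA adapter into its base model and export the result.
# BASE_MODEL and ADAPTER_DIR reuse the placeholders from the training command;
# EXPORT_DIR is a hypothetical output location.
BASE_MODEL=/root/model_path
ADAPTER_DIR=saves_path
EXPORT_DIR=merged_model

if command -v llamafactory-cli >/dev/null 2>&1; then
    llamafactory-cli export \
        --model_name_or_path "$BASE_MODEL" \
        --adapter_name_or_path "$ADAPTER_DIR" \
        --template qwen \
        --finetuning_type lora \
        --export_dir "$EXPORT_DIR"
else
    # The CLI is only on PATH inside the environment where it was installed.
    echo "llamafactory-cli not found; activate the conda environment first" >&2
fi
```

The exported directory can then be passed as `--model_name_or_path` when launching the merged model.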

Launch the merged model

CUDA_VISIBLE_DEVICES=0 llamafactory-cli webchat \
    --model_name_or_path /root/Qwen2.5-7B-Instruct \
    --template qwen

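JSON file errors

If dataset splitting fails, the JSON file may contain a byte order mark (BOM).

Fix: use Notepad++ to convert the file to UTF-8 without a BOM.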
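
On a Linux training machine without Notepad++, the same check and fix can be done from the shell. This is a minimal sketch, assuming the file is UTF-8 and the BOM is the three bytes EF BB BF at the very start; `strip_bom` is a hypothetical helper name.

```shell
# Hypothetical helper: remove a UTF-8 BOM (bytes EF BB BF) from a file in place.
strip_bom() {
    file="$1"
    # Read the first three bytes as hex, e.g. "efbbbf".
    head_hex=$(head -c 3 "$file" | od -An -tx1 | tr -d ' \n')
    if [ "$head_hex" = "efbbbf" ]; then
        # tail -c +4 prints everything from the 4th byte onward.
        tail -c +4 "$file" > "$file.nobom" && mv "$file.nobom" "$file"
        echo "BOM removed: $file"
    else
        echo "No BOM found: $file"
    fi
}

# Usage (the dataset path is a placeholder):
# strip_bom data/my_dataset.json
```

Running the helper a second time on the same file reports that no BOM was found, so it is safe to apply to every dataset file before training.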